I did some more research and have now found a good explanation for why GUIDs are faster than IDENTITYs…

The function NEWSEQUENTIALID() is a wrapper around a Windows API function (UuidCreateSequential).

See here: https://msdn.microsoft.com/de-de/library/ms189786%28v=sql.120%29.aspx and https://msdn.microsoft.com/de-de/library/aa379322%28VS.85%29.aspx

As a result, SQL Server itself does no caching (= logging) of the most recently used ID.

For IDENTITY, caching is hard-coded for every 10th value.

This results in a large volume of caching (= logging).

When the DB is restored, the engine checks the last value entered and takes the next available one (so no gap is created in the IDs).

At least in theory. In practice there is the reseed bug, on which you even left a comment on Connect :)

For SEQUENCEs, the default cache size is 50.

As a result, any sequence is already about 1-2 seconds faster than IDENTITY at 1 million rows.

If the cache size is maximized, sequences should become just as fast as GUIDs.

When the DB is restored, a (large) gap in the IDs is to be expected (corresponding to the unused values of the still-open cache).
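The relationship between cache size, logging volume, and gaps described above can be sketched with simple arithmetic. This is a rough model and not a measurement: it assumes exactly one log write per cache allocation, and the function names `cache_allocations` and `unused_in_open_cache` are hypothetical helpers invented for this illustration. The cache sizes 10 and 50 are the values quoted above.

```python
def cache_allocations(rows: int, cache_size: int) -> int:
    """Blocks of values that must be allocated to number `rows` rows.

    Assumption: each allocation costs exactly one log write.
    """
    # Integer ceiling division: a partially used block still counts.
    return (rows + cache_size - 1) // cache_size


def unused_in_open_cache(values_used: int, cache_size: int) -> int:
    """Values left in the currently open block, i.e. the gap that would
    appear if that block were discarded."""
    return (-values_used) % cache_size


ROWS = 1_000_000
for name, cache_size in [("IDENTITY", 10), ("SEQUENCE (default)", 50)]:
    print(f"{name}: {cache_allocations(ROWS, cache_size):,} allocations")

# Possible gap for a sequence after 1,000,007 values with a cache of 50.
print("unused values:", unused_in_open_cache(1_000_007, 50))
```

Under this model, 1 million rows need 100,000 allocations with a cache of 10 but only 20,000 with a cache of 50, which is consistent with sequences coming out ahead. The possible gap grows with the cache size, so a larger cache trades fewer log writes for bigger gaps.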

A good article that examines the performance of SEQUENCEs compared with IDENTITYs is here:

http://sqlmag.com/sql-server/sequences-part-2

Here is another interesting article: http://blogs.msdn.com/b/sqlserverfaq/archive/2010/05/27/guid-vs-int-debate.aspx

Test scenario for GUIDs vs. IDENTITY vs. SEQUENCE

See also…

http://www.sqlskills.com/blogs/kimberly/disk-space-is-cheap/

http://www.codeproject.com/Articles/32597/Performance-Comparison-Identity-x-NewId-x-NewSeque

RML Utility for parallel queries… https://support.microsoft.com/en-us/kb/944837

Set up a table with 6.7 million rows of sales data (a real-scenario table).

Add a clustered index on:

ID (UUID/GUID/SEQUENCE/IDENTITY)

Add 2 nonclustered indexes on other columns.

Use a foreign key to Customer.

Add a foreign key from SalesDetails to the new Sales table.

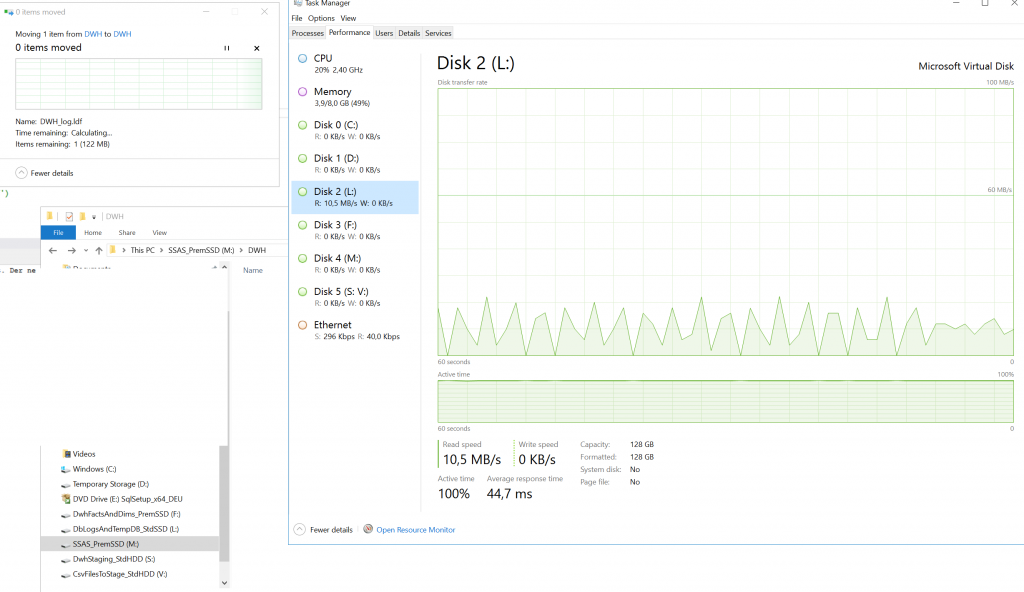

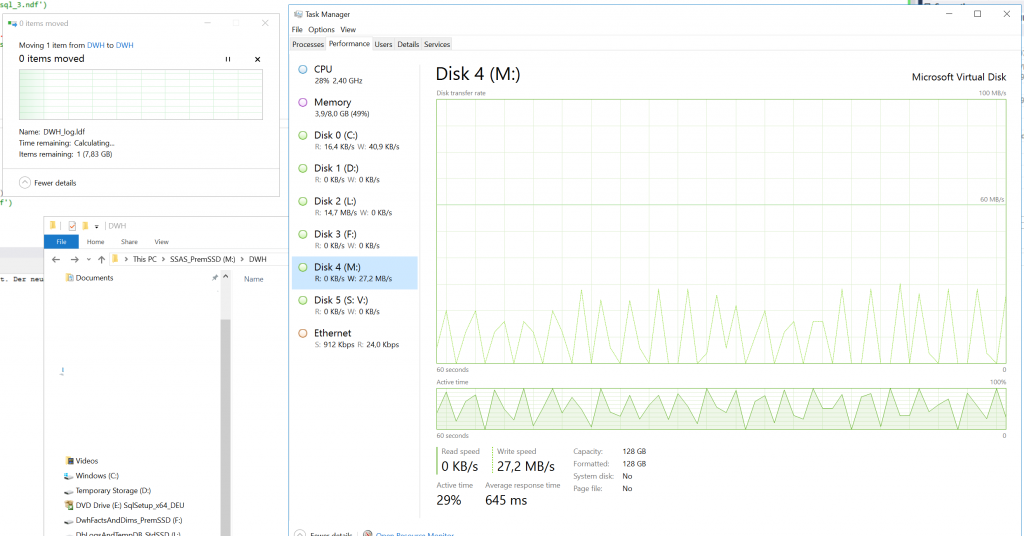

Set the database and log file size to 5 GB.

Try different Fill Factors (100 vs. 90 vs. 80 vs. 70 vs. 60 vs. 50 vs. 40 vs. 30 vs. 20)

- Insert 100,000 rows using one batch

- Insert 1,000 rows in 100 concurrent sessions at once

- Measure the inserts

- Measure the selects using an INNER JOIN on SalesDetails and Customer
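The combinations listed above can be enumerated as a test matrix. The following is only a sketch of how the runs might be organized, not the harness actually used: it assumes every key type is tested with every fill factor and both insert modes, and it reads "1,000 rows in 100 concurrent sessions" as 1,000 rows per session. The names `build_test_matrix`, `KEY_TYPES`, `FILL_FACTORS`, and `INSERT_MODES` are hypothetical.

```python
from itertools import product

# Values taken from the scenario description.
KEY_TYPES = ["UUID", "GUID", "SEQUENCE", "IDENTITY"]
FILL_FACTORS = [100, 90, 80, 70, 60, 50, 40, 30, 20]

# (label, concurrent sessions, rows per session)
# Assumption: the concurrent mode inserts 1,000 rows in each session.
INSERT_MODES = [
    ("single batch", 1, 100_000),
    ("100 concurrent sessions", 100, 1_000),
]


def build_test_matrix():
    """Return one dict per test run (full cross product, an assumption)."""
    runs = []
    for key_type, fill_factor, mode in product(KEY_TYPES, FILL_FACTORS, INSERT_MODES):
        label, sessions, rows_per_session = mode
        runs.append({
            "key_type": key_type,
            "fill_factor": fill_factor,
            "insert_mode": label,
            "sessions": sessions,
            "rows_per_session": rows_per_session,
            "total_rows": sessions * rows_per_session,
        })
    return runs


matrix = build_test_matrix()
print(len(matrix), "runs")
print(matrix[0])
```

With that reading, both insert modes add the same total number of rows, so their timings are directly comparable for each key type and fill factor.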

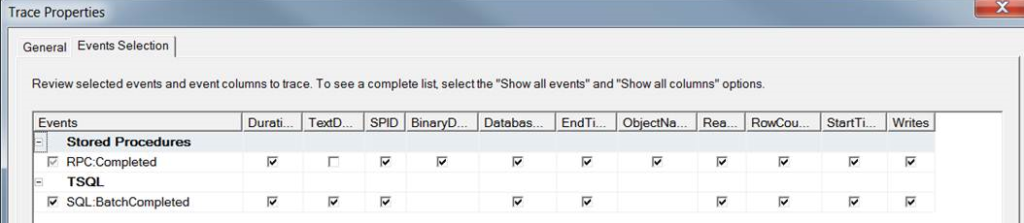

Measure IOs using SQL Server Profiler (TSQL_Duration):